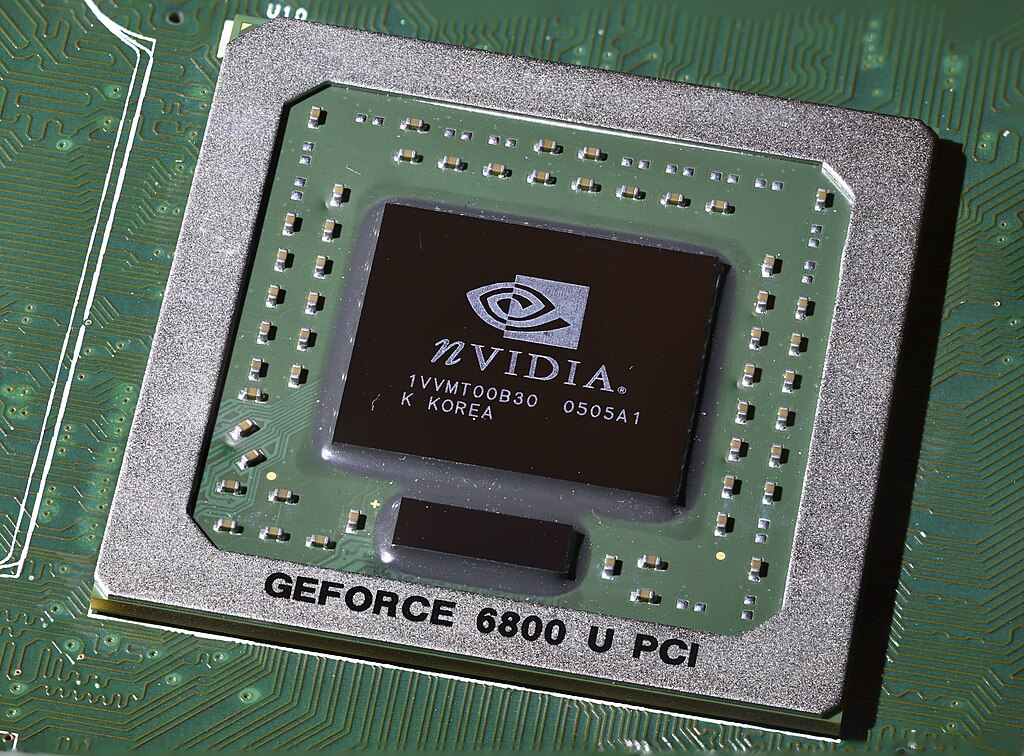

Reports earlier this week suggested that Nvidia (NASDAQ: NVDA) had agreed to acquire AI chip startup Groq in a $20 billion all-cash transaction. However, updated details clarify that the arrangement is not a traditional acquisition but a strategic, non-exclusive inference technology licensing agreement aimed at accelerating artificial intelligence inference at global scale.

Groq, a designer of high-performance AI accelerator chips founded by former Google TPU engineers, confirmed that Nvidia will license its inference technology rather than purchase the company outright. The agreement focuses on expanding access to fast, predictable, and cost-efficient AI inference, an area gaining importance as AI workloads shift from training to deployment. Groq will continue to operate as an independent company, with Simon Edwards appointed as chief executive officer.

As part of the deal, Groq founder Jonathan Ross, President Sunny Madra, and other key team members will join Nvidia to help scale and advance the licensed technology. Importantly, the agreement does not grant Nvidia exclusive rights to Groq’s technology, nor does it involve the acquisition of Groq’s intellectual property. Nvidia has not yet officially commented on the partnership. Its shares rose modestly in premarket trading following the news.

Groq recently raised $750 million at a valuation of approximately $6.9 billion. The reported $20 billion figure is nearly three times that valuation, which makes it all the more notable for a licensing arrangement rather than an outright purchase.

Wall Street analysts have weighed in on the strategic implications. Bank of America analyst Vivek Arya said the deal reflects Nvidia’s recognition that while GPUs dominate AI training, inference workloads may increasingly benefit from specialized chips. Groq’s Language Processing Units, or LPUs, are designed for highly predictable and ultra-fast inference using large amounts of on-chip SRAM, in contrast to Nvidia’s general-purpose GPUs, which rely on high-bandwidth memory for scalability.

Analysts suggest future Nvidia systems could integrate GPUs and LPUs within the same rack, potentially connected by NVLink. Others noted that while the price appears high for a non-exclusive license, it is relatively small compared with Nvidia’s massive cash position, free cash flow, and multi-trillion-dollar market capitalization. Overall, the deal is viewed as a strategic move to strengthen Nvidia’s position in the rapidly evolving AI inference market.
