Samsung Electronics is pushing into the artificial intelligence (AI) chip market with plans to mass-produce high-bandwidth memory (HBM) chips this year, a move that could challenge current leader SK hynix's dominance with superior speed and capacity.

To catch up with SK hynix, Samsung is set to mass-produce HBM3 memory chips with 16-gigabyte and 24-gigabyte capacities. The chips are reported to offer the fastest data processing speed on the market, at 6.4 gigabits per second (Gbps). That speed helps accelerate the training computations that servers must perform to deliver AI services.
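For a rough sense of what the 6.4 Gbps figure means at the chip level, the short sketch below converts it into total bandwidth for one HBM stack. It assumes the figure is a per-pin data rate and that each stack has a 1,024-bit interface, as in the published HBM3 standard; the article states neither, so the function name and the default width are illustrative assumptions.

```python
def stack_bandwidth_gb_per_s(pin_rate_gbps: float, interface_bits: int = 1024) -> float:
    """Convert a per-pin data rate (Gbps) into per-stack bandwidth (GB/s).

    Assumption (not from the article): the quoted speed is per pin and
    the stack exposes `interface_bits` data pins.
    """
    total_gigabits_per_s = pin_rate_gbps * interface_bits
    return total_gigabits_per_s / 8  # 8 bits per byte


bandwidth = stack_bandwidth_gb_per_s(6.4)
print(f"Approximate bandwidth per stack: {bandwidth:.1f} GB/s")
```

Under those assumptions, one stack moves roughly 819 gigabytes per second, which helps explain why the chips are aimed at AI servers.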

Samsung is also introducing new memory solutions, such as HBM-PIM, a high-bandwidth memory chip with integrated AI processing power, and CXL DRAM, which overcomes the limitations of DRAM capacity. With these new products and increased presence in the HBM market, Samsung is expected to increase its profitability and gain competitive ground in the sluggish memory chip market.

As AI services gain traction, demand for high-performance, high-capacity DRAM to support them is also increasing. HBM chips are designed to meet that demand.

Vertically stacking multiple DRAM dies gives HBM chips faster data processing than conventional DRAM. However, this advantage comes at a cost: HBM is around two to three times the price of standard DRAM.

Samsung also plans to launch its next-generation HBM3P in the second half of the year to meet market demand for higher performance and capacity. Its products, with a processing speed of 6.4 gigabits per second (Gbps), could give it an edge in speeding up AI training on servers. Samsung aims to begin supplying GPU makers in North America in the fourth quarter of this year.

With the memory chip industry struggling with falling demand, Samsung's move to explore new memory solutions such as HBM-PIM and CXL DRAM is timely. As Samsung brings new products to the HBM market, it could boost its profitability and strengthen its position against rivals.

TrendForce expects the HBM market to grow at an annual rate of up to 45 percent from 2022 to 2025, making this an exciting time for the high-bandwidth memory chip market.
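To put that forecast in perspective, the sketch below compounds the upper-bound 45 percent rate over the three years from 2022 to 2025. It assumes the rate is applied once per year at the top of the forecast range; the function name is illustrative, and the result is a growth multiple, not a market-size estimate.

```python
def compound_growth(rate: float, years: int) -> float:
    """Return the total growth multiple after compounding `rate` annually."""
    return (1 + rate) ** years


# Upper bound of the forecast: 45 percent a year, 2022 to 2025 (three years).
multiple = compound_growth(0.45, 3)
print(f"Market size multiple after 3 years: about {multiple:.2f}x")
```

At the top of the range, the market would roughly triple over the period.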

According to industry analysts, market leader SK hynix currently holds around 50 percent of the market, with Samsung at about 40 percent and Micron trailing at 10 percent. The HBM market is still new and accounts for only about 1 percent of the entire DRAM market.

In the fourth quarter of this year, Samsung is expected to begin supplying HBM3 to GPU makers in North America. As the market for generative AI services grows, HBM chips used for AI servers are gaining traction in the memory chip industry. With the AI era in full swing, the demand for HBM products is expected to increase dramatically, making Samsung's move into the AI memory chip market well-timed and highly strategic.

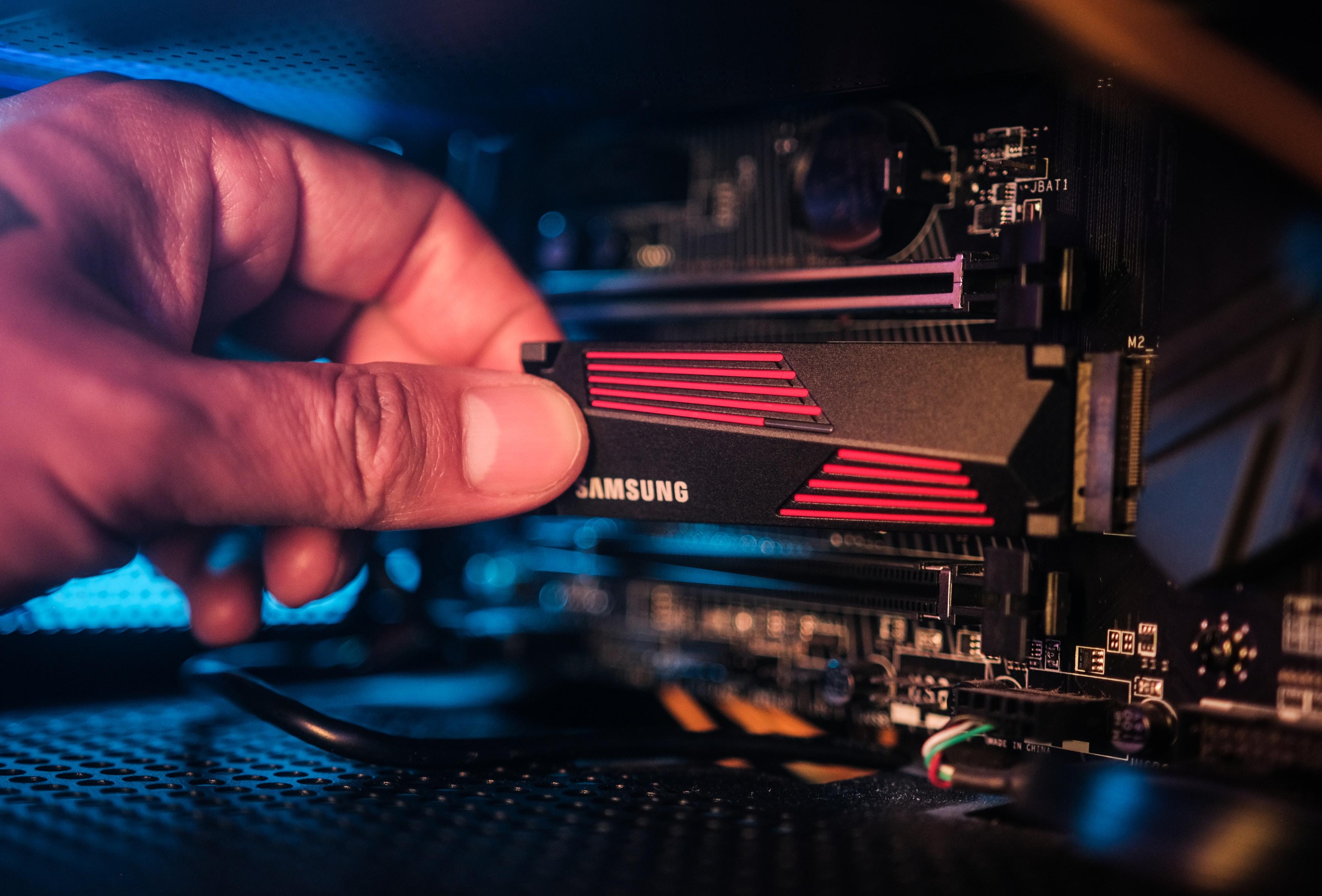

Photo: Samsung Memory/Unsplash
