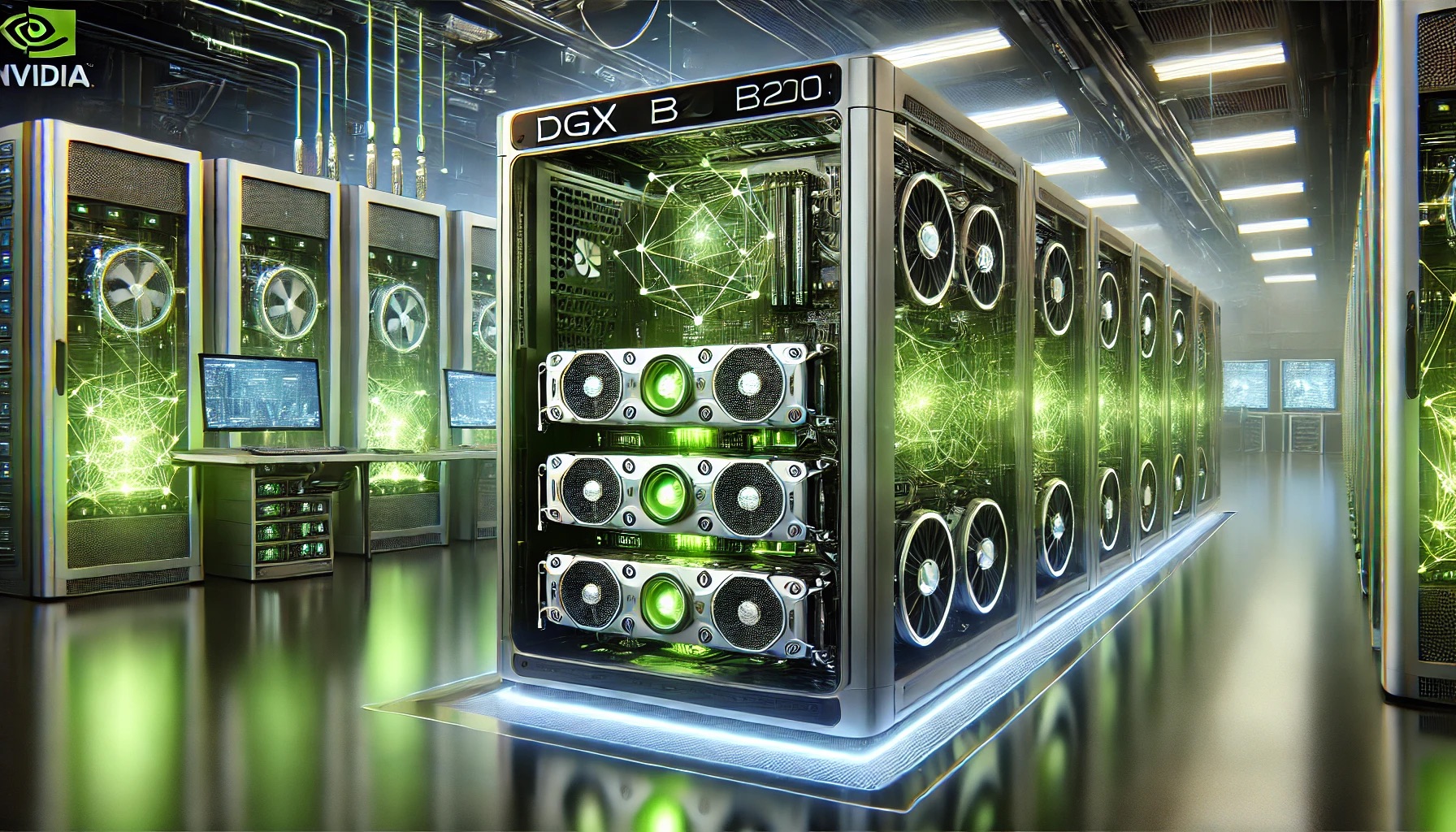

In a significant step for artificial intelligence, OpenAI has received one of the first NVIDIA Blackwell DGX B200 systems. The system's Blackwell GPUs are expected to speed up the training and improve the performance of OpenAI's advanced AI models.

OpenAI Boosts AI Power With Early NVIDIA DGX B200 System

Demand for B200 GPUs, which are built on NVIDIA's Blackwell architecture, is running high.

The B200 is NVIDIA's fastest data center GPU to date, and orders have begun to arrive from a number of multinational corporations. NVIDIA had previously said that OpenAI would be among the companies using B200 GPUs. OpenAI appears to be aiming to expand its AI computing capacity by taking advantage of the B200's performance gains.

OpenAI Showcases NVIDIA's Blackwell System for AI Innovation

Earlier today, OpenAI's official X account shared a photo of its staff with an early DGX B200 engineering sample. Now that the platform has arrived at its office, the team is ready to put the B200 to the test and use it to train its AI models.

The DGX B200 is an all-in-one AI platform built around Blackwell B200 GPUs for training, fine-tuning, and inference. Each DGX B200 unit contains eight B200 GPUs, providing up to 1.4 TB of total GPU memory and up to 64 TB/s of HBM3e memory bandwidth.

Blackwell GPUs Power Major Industry Players' AI Ambitions

According to NVIDIA, the DGX B200 delivers up to 72 petaFLOPS of training performance (FP8) and up to 144 petaFLOPS of inference performance (FP4).

OpenAI has shown interest in Blackwell GPUs for some time, and CEO Sam Altman had previously hinted that the company might use them to train its AI models.

Global Tech Giants Jump on the Blackwell Bandwagon

With so many industry heavyweights already choosing Blackwell GPUs to train their AI models, OpenAI is making sure it won't be left behind. Amazon, Google, Meta, Microsoft, Tesla, xAI, and Dell Technologies are among the companies adopting the platform.

WCCFTECH previously reported that xAI intends to deploy 50,000 B200 GPUs in addition to the 100,000 H100 GPUs it already uses. Foxconn has also announced that it will use B200 GPUs to build Taiwan's fastest supercomputer.

NVIDIA B200 Outshines Previous Generations in Power Efficiency

Compared with NVIDIA's Hopper GPUs, Blackwell offers both higher performance and better power efficiency, making it well suited to OpenAI's AI model training.

According to NVIDIA, the DGX B200 can handle large language models (LLMs), chatbots, and recommender systems, and it delivers three times the training performance and fifteen times the inference performance of the previous-generation DGX H100.
