In the UK, a quarter of people who take their own lives had been in contact with a health professional in the week before their death, and most had spoken to someone in the month before. Yet assessing a patient's suicide risk remains extremely difficult.

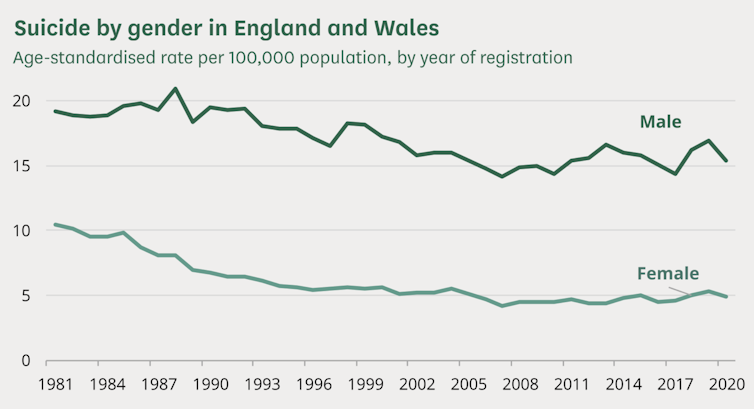

There were 5,219 recorded deaths by suicide in England in 2021. While the suicide rate in England and Wales has declined by around 31% since 1981, the majority of this decrease happened before 2000. Suicide is three times more common in men than in women, and this gap has increased over time.

Suicide statistics in England and Wales from 1981 to 2021. House of Commons - Suicide Statistics Research Briefing, October 12 2021

A study conducted in October 2022, led by the Black Dog Institute at the University of New South Wales, found that artificial intelligence (AI) models outperformed clinical risk assessments. It surveyed 56 studies from 2002 to 2021 and found AI correctly identified 66% of people who would go on to experience a suicide outcome and 87% of people who would not. In comparison, traditional scoring methods carried out by health professionals are only slightly better than random.

AI is widely researched in other medical domains such as cancer. However, despite their promise, AI models for mental health are yet to be widely used in clinical settings.

Why suicide prediction is so difficult

A 2019 study from the Karolinska Institutet in Sweden found four traditional scales used to predict suicide risk after recent episodes of self-harm performed poorly. The challenge of suicide prediction stems from the fact that a patient’s intent can change rapidly.

The guidance on self-harm used by health professionals in England explicitly states that suicide risk assessment tools and scales should not be relied upon. Instead, professionals should use a clinical interview. While doctors do carry out structured risk assessments, these are used to make the most of interviews rather than to provide a scale that determines who gets treatment.

The risk of AI

The study from the Black Dog Institute showed promising results, but if 50 years of research into traditional (non-AI) prediction yielded methods that were only slightly better than random, we need to ask whether we should trust AI. When a new development gives us something we want (in this case better suicide risk assessments) it can be tempting to stop asking questions. But we can’t afford to rush this technology. The consequences of getting it wrong are literally life and death.

There will never be a perfect risk assessment. Chanintorn.v/Shutterstock

AI models always have limitations, including how their performance is evaluated. For example, using accuracy as a metric can be misleading if the dataset is unbalanced. A model can achieve 99% accuracy by always predicting there will be no risk of suicide if only 1% of the patients in the dataset are high risk.
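The arithmetic behind this example can be checked in a few lines. The sketch below uses a made-up dataset of 1,000 patients (an assumption for illustration, not data from any study) and a "model" that always predicts no risk. It also computes sensitivity, the share of high-risk patients the model actually flags, to show what accuracy hides.

```python
# Hypothetical dataset: 1,000 patients, 1% of whom are high risk (label 1).
labels = [1] * 10 + [0] * 990

# A "model" that always predicts no risk (label 0), whatever the patient.
predictions = [0] * len(labels)

# Accuracy: share of all predictions that match the true label.
correct = sum(p == y for p, y in zip(predictions, labels))
accuracy = correct / len(labels)

# Sensitivity: share of high-risk patients the model flags as high risk.
true_positives = sum(p == 1 and y == 1 for p, y in zip(predictions, labels))
sensitivity = true_positives / sum(labels)

print(f"accuracy    = {accuracy:.2%}")     # 99.00%
print(f"sensitivity = {sensitivity:.2%}")  # 0.00%
```

The model scores 99% accuracy while missing every high-risk patient, which is why measures such as sensitivity are usually reported alongside accuracy when outcomes are rare.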

It’s also essential to assess AI models on data that is different from the data they were trained on. This is to detect overfitting, where models learn to predict results from the training material almost perfectly but struggle with new data. Models may have worked flawlessly during development, but make incorrect diagnoses for real patients.

For example, AI was found to overfit to surgical markings on a patient’s skin when used to detect melanoma (a type of skin cancer). Doctors use blue pens to highlight suspicious lesions, and the AI learnt to associate these markings with a higher probability of cancer. This led to misdiagnosis in practice when blue highlighting wasn’t used.

It can also be difficult to understand what AI models have learnt, such as why they predict a particular level of risk. This is a pervasive problem with AI systems in general, and has led to a whole field of research known as explainable AI.

The Black Dog Institute found 42 out of the 56 studies analysed had a high risk of bias. In this scenario, bias means the model over- or under-predicts the average rate of suicide. For example, the data has a 1% suicide rate, but the model predicts a 5% rate. High bias leads to misdiagnosis, either missing patients who are high risk or assigning too much risk to low-risk patients.
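Taking the definition of bias given here, one simple check is to compare the average risk a model predicts with the rate actually observed in the data. The sketch below uses the 1% and 5% figures from the example; the patient counts and the flat 5% prediction are assumptions chosen only to reproduce those figures.

```python
# Hypothetical cohort: 1,000 patients with a 1% observed outcome rate.
observed_outcomes = [1] * 10 + [0] * 990

# Hypothetical model output: a predicted risk of 5% for every patient.
predicted_risks = [0.05] * len(observed_outcomes)

observed_rate = sum(observed_outcomes) / len(observed_outcomes)
mean_predicted = sum(predicted_risks) / len(predicted_risks)

# A ratio above 1 means the model over-predicts on average; below 1, under-predicts.
ratio = mean_predicted / observed_rate

print(f"observed rate  = {observed_rate:.1%}")   # 1.0%
print(f"mean predicted = {mean_predicted:.1%}")  # 5.0%
print(f"ratio          = {ratio:.1f}")           # 5.0
```

A ratio of about five means the model assigns, on average, five times more risk than the data supports. This check only looks at the overall average, so a model can pass it and still be wrong for individual patients.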

These biases stem from factors such as participant selection. For example, several studies had high case-control ratios, meaning the rate of suicides in the study was higher than in reality, so the AI model was likely to assign too much risk to patients.

A promising outlook

The models mostly used data from electronic health records. But some also included data from interviews, self-report surveys, and clinical notes. The benefit of using AI is that it can learn from large amounts of data faster and more efficiently than humans, and spot patterns missed by overworked health professionals.

While progress is being made, the AI approach to suicide prevention isn’t ready to be used in practice. Researchers are already working to address many of the issues with AI suicide prevention models, such as how hard it is to explain why algorithms made their predictions.

However, suicide prediction is not the only way to reduce suicide rates and save lives. An accurate prediction does not help if it doesn’t lead to effective intervention.

On its own, suicide prediction with AI is not going to prevent every death. But it could give mental health professionals another tool to care for their patients. It could be as life-changing as state-of-the-art heart surgery if it raised the alarm for overlooked patients.
