NVIDIA Corporation (NASDAQ: NVDA) used the CES 2026 trade show in Las Vegas to reaffirm its leadership in artificial intelligence infrastructure, announcing that its next-generation Rubin data center platform is now in full production and on track for release later this year. The move highlights Nvidia's accelerated release cycle as competition intensifies from rivals such as Advanced Micro Devices (NASDAQ: AMD) and from custom silicon developed by major cloud providers.

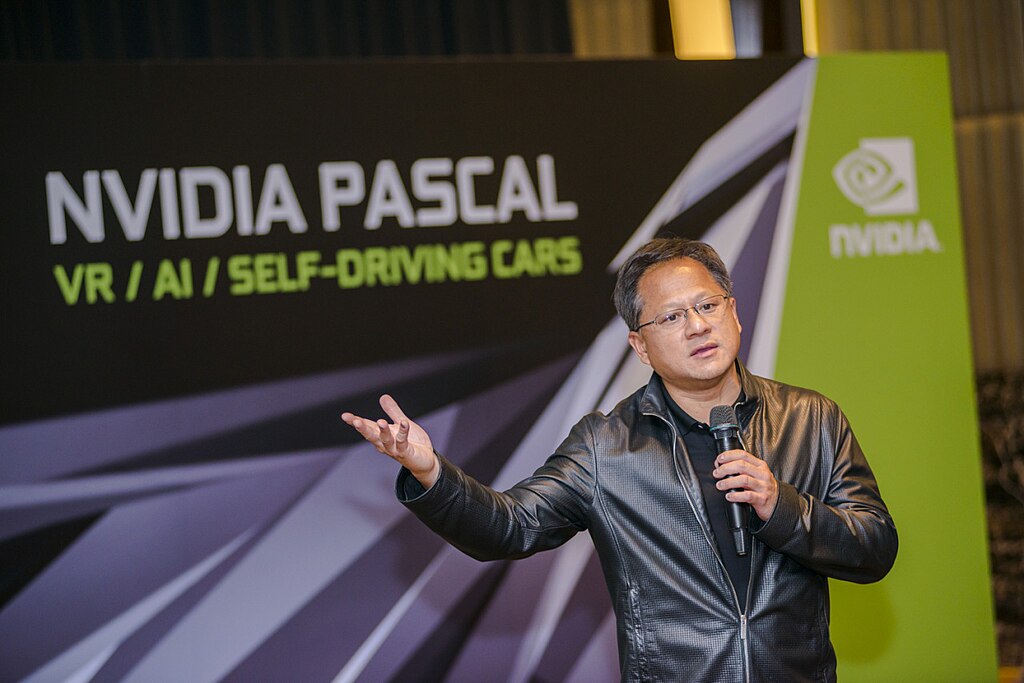

During his keynote address, CEO Jensen Huang revealed that all six chips in the Rubin platform have successfully returned from manufacturing partners and passed initial milestone tests. This puts the new AI accelerator systems on schedule for customer deployments in the second half of 2026. By unveiling Rubin early, Nvidia is signaling confidence in its roadmap while keeping enterprises closely aligned with its hardware ecosystem.

The Rubin GPU is designed to meet the growing demands of agentic AI models, which rely on multistep reasoning rather than simple pattern recognition. According to Nvidia, Rubin delivers 3.5 times the AI training performance and up to five times the inference performance of the current Blackwell architecture. The platform also introduces the new Vera CPU, which features 88 custom cores and offers double the performance of its predecessor. Nvidia says Rubin-based systems can match Blackwell's results while using far fewer components, reducing the cost per token by as much as tenfold.

Positioned as a modular "AI factory" or "supercomputer in a box," the Rubin platform integrates the BlueField-4 DPU, which manages AI-native storage and long-term context memory. Nvidia says this design delivers up to five times better power efficiency, a critical factor for hyperscale data centers. Early adopters include Microsoft (NASDAQ: MSFT), Amazon Web Services (NASDAQ: AMZN), Google Cloud (NASDAQ: GOOGL), and Oracle Cloud Infrastructure (NYSE: ORCL).

Beyond data centers, Nvidia also highlighted major advances in robotics and autonomous vehicles, calling the current period a “ChatGPT moment” for physical AI. New offerings such as Alpamayo AI models for self-driving systems and the Jetson T4000 robotics module further underscore Nvidia’s bet that reasoning-based AI will drive a massive, trillion-dollar infrastructure upgrade across industries.
