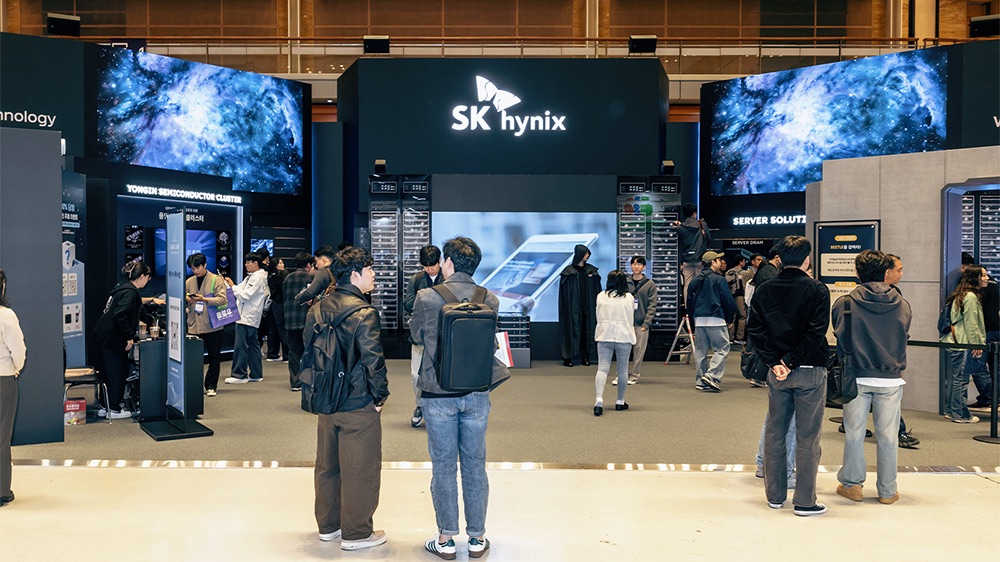

At the SK AI Summit 2024, SK hynix CEO Kwak Noh-Jung unveiled the world's first 16-high 48GB HBM3E memory solution, the highest capacity and layer count of any HBM product to date. The new HBM3E promises higher performance in AI accelerators, with initial samples expected in early 2025.

SK hynix Unveils New 16-Hi HBM3E Memory Solution

At the SK AI Summit 2024, CEO Kwak Noh-Jung presented the company's new 16-high (16-Hi) HBM3E memory.

The product offers 48GB of capacity per stack, the highest capacity and layer count of any HBM product in the industry. Initial samples are expected to reach customers in early 2025.

Highest Capacity and Layer Count in the Industry

Speaking at the SK AI Summit in Seoul, Kwak unveiled the 48GB 16-high HBM3E, the industry's first 16-high HBM product. It follows the company's 12-high HBM3E and sets an industry record for both capacity and layer count.
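As a rough sanity check on the headline figures, a stack's capacity is the number of stacked DRAM dies multiplied by the density of each die. The sketch below assumes every die in a stack has the same density, and its function names are hypothetical, chosen only for illustration. Spreading 48GB across 16 layers implies 3GB (24Gb) per die; at that same die density, a 12-high stack works out to 36GB.

```python
# Hypothetical helpers for illustration only.
# Assumption: all DRAM dies in a stack share the same density.

GBITS_PER_GBYTE = 8  # 1 gigabyte = 8 gigabits


def stack_capacity_gb(layers: int, die_density_gbit: float) -> float:
    """Stack capacity in GB for `layers` dies of `die_density_gbit` each."""
    if layers <= 0 or die_density_gbit <= 0:
        raise ValueError("layers and die density must be positive")
    return layers * die_density_gbit / GBITS_PER_GBYTE


def die_density_gbit(capacity_gb: float, layers: int) -> float:
    """Per-die density in Gb implied by a stack's capacity and layer count."""
    if layers <= 0:
        raise ValueError("layers must be positive")
    return capacity_gb * GBITS_PER_GBYTE / layers


if __name__ == "__main__":
    # 48 GB spread over 16 dies
    density = die_density_gbit(48, 16)
    print(f"implied die density: {density:.0f} Gb")
    # Same die density at two stack heights
    for layers in (12, 16):
        print(f"{layers}-high stack: {stack_capacity_gb(layers, density):.0f} GB")
```

Because capacity scales linearly with layer count at a fixed die density, going from 12 to 16 layers adds a third more capacity per stack.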

Here is a rundown of what Mr. Kwak had to say, according to WCCFTECH:

- Although the 16-high HBM market is expected to open up with the HBM4 generation, SK hynix plans to offer 48GB 16-high HBM3E samples to customers early next year to secure technological stability.

- SK hynix built the 16-high HBM3E using Advanced MR-MUF, the process that enabled mass production of its 12-high products, while also developing hybrid bonding technology as a backup.

- Compared with 12-high products, 16-high products deliver a 32% improvement in inference performance and an 18% improvement in training performance. With the market for AI inference accelerators projected to grow, the 16-high solution is anticipated to help the company maintain its leadership in AI memory.

- SK hynix is leveraging its competitiveness in low-power, high-performance products by developing LPCAMM2 modules for PCs and data centers, as well as LPDDR5 and LPDDR6 based on its 1c nm process.

- Among other products, the company is preparing to release UFS 5.0, high-capacity QLC-based eSSDs, and PCIe Gen 6 SSDs.

- To offer customers the best possible products, SK hynix plans to adopt a logic process for the HBM base die starting with the HBM4 generation, in partnership with a leading global logic foundry.

- Anticipating a paradigm shift in AI memory, SK hynix expects customized HBM to become a high-performance solution tailored to specific customer needs in capacity, bandwidth, and functionality.

- To overcome the so-called "memory wall," in which memory bandwidth fails to keep pace with processor performance, SK hynix is developing solutions that add computational capabilities to memory. Technologies such as Computational Storage, Processing in Memory (PIM), and Processing Near Memory (PNM), which will be crucial for handling massive datasets, are expected to reshape the architecture of future AI systems and the course of the AI industry as a whole.
